- Prologue – covers the overall challenge at a high level

- Part One – Recruiting and Interviews

- Part Two – Threat and Vulnerability Management – Application Security

- Part Three – Threat and Vulnerability Management – Other Layers

- Part Four – Logging

- Part Five – Cryptography and Key Management, and Identity Management

- Part Six – Trust (network controls, such as firewalls and proxies), and Resilience

Threat and Vulnerability Management (TVM) – Other Layers

This article covers the key principles of vulnerability management for cloud, devops, and devsecops, and herein addresses the challenges faced by fintechs.

The previous post covered TVM from the application security point of view, but what about everything else? Being cloud and “dynamic”, even with Kubernetes and the mythical Immutable Architecture, doesn’t mean you don’t have to worry about the security of the operating systems and many devices in your cloud. The devil loves to hear claims to the effect that devops never SSHs to VM instances. And does SaaS help? Well that depends if SaaS is a good move – more on that later.

Fintechs are focussing on application security, which is good, but not so much in the security of other areas such as containers, IaaS/SaaS VMs, and little thought is ever given to the supply of patches and container images (they need to come from an integral source – preferably not involving pulling from the public Internet, and the patches and images need to be checked for integrity themselves).

And in general with vulnerability assessment (VA), we in infosec are still battling a popular misconception, which after a quarter of a decade is still a popular misconception – and that is the value, or lack of, of unauthenticated scanners such as OpenVAS and Nessus. More on this later.

The Overall Approach

The design process for a TVM capability was covered in Part One. Capabilities are people, process, and technology. They’re not just technology. So the design of TVM is not as follows: stick an OpenVAS VM in a VPC, fill it with target addresses, send the auto-generated report to ops. That is actually how many fintechs see the TVM challenge, or they just see it as being a purely application security show.

So there is a vulnerability reported. Is it a false positive? If not, then what is the risk? And how should the risk be treated? In order to get a view of risk, security professionals with an attack mindset need to know

- the network layout and data flows – think from the point of view of an attacker – so for example if a front end web micro-service is compromised, what can the attacker can do from there? Can they install recon tools such as a port scanner or sniffer locally and figure out where the back end database is? This is really about “trust relationships”. That widget that routes connections may in itself seem like a device that isn’t worthy of attention, but it routes connections to a database hosting crown jewels…you can see its an important device and its configuration needs some intense scrutiny.

- the location and sensitivity of critical information assets.

- The ease and result of an exploit – how easy is it to gain a local shell presence and then what is the impact?

The points above should ideally be covered as part of threat modelling, that is carried out before any TVM capability design is drafted.

if the engineer or analyst or architect has the experience in CTF or simulated attack, they are in a good position to speak confidently about risk.

Types of Tool

I covered appsec tools in part two.

There are two types: unauthenticated and credentialed or authenticated scanners.

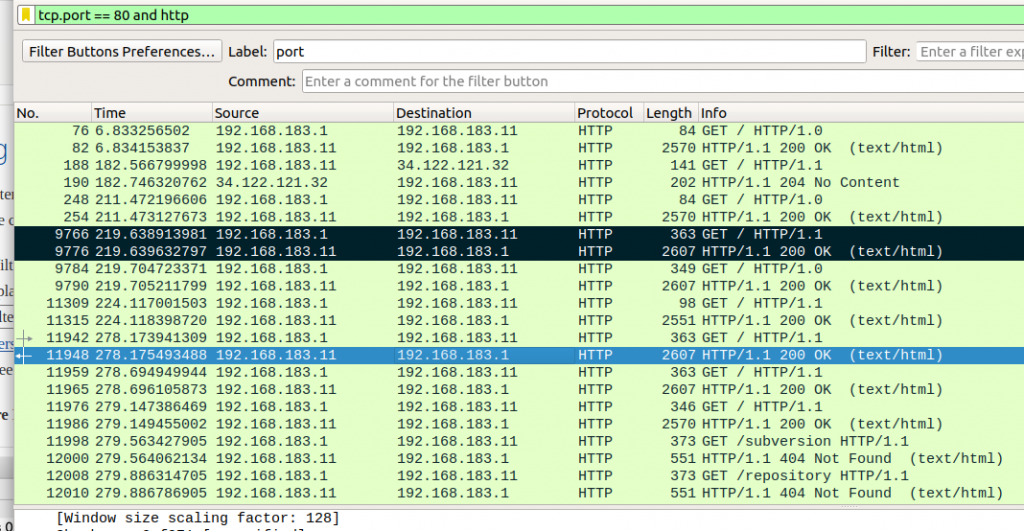

Many years ago i was an analyst running VA scans as part of an APAC regional accreditation service. I was using Nessus mostly but some other tools also. To help me filter false positives, I set up a local test box with services like Apache, Sendmail, etc, pointed Nessus at the box, then used Ethereal (now Wireshark) to figure out what the scanner was actually doing.

What became abundantly obvious with most services, is that the scanner wasn’t actually doing anything. It grabs a service banner and then …nothing.

I thought initially there was a problem with my setup but soon eliminated that doubt. There are a few cases where the scanner probes for more information but those automated efforts are somewhat ineffectual and in many cases the test that is run, and then the processing of the result, show a lack of understanding of the vulnerability. A false negative is likely to result, or at best a false positive. The scanner sees a text banner response such as “apache 2.2.14”, looks in its database for public disclosed vulnerability for that version, then barfs it all out as CRITICAL, red colour, etc.

Trying to assess vulnerability of an IaaS VM with unauthenticated VA scanners is like trying to diagnose a problem with your car without ever lifting the hood/bonnet.

So this leads us to credentialed scanners. Unfortunately the main players in the VA space pander to unauthenticated scans. I am not going to name vendors here, but its clear the market is poorly served in the area of credentialed scanning.

It’s really very likely that sooner rather than later, accreditation schemes will mandate credentialed scanning. It is slowly but surely becoming a widespread realisation that unauthenticated scanners are limited to the above-mentioned testing methodology.

So overall, you will have a set of Technical Security Standards for different technologies such as Linux, Cisco IoS, Docker, and some others. There are a variety of tools out there that will get part of the job done with the more popular operating systems and databases. But in order to check compliance to your Technical Security Standards, expect to have to bridge the gap with your own scripting. With SSH this is infinitely feasible. With Windows, it is harder, but check Ansible and how it connects to Windows with Python.

Asset Management

Before you can assess for vulnerability, you need to know what your targets are. Thankfully Cloud comes with fewer technical barriers here. Of course the same political barriers exist as in the on-premise case, but the on-premise case presents many technical barriers in larger organisations.

Google Cloud has a built-in feature, and with AWS, each AWS Service (eg Amazon EC2, Amazon S3) have their own set of API calls and each Region is independent. AWS Config is highly useful here.

SaaS

I covered this issue in more detail in a previous post.

Remember the old times of on-premise? Admins were quite busy managing patches and other aspects of operating systems. There are not too many cases where a server is never accessed by an admin for more than a few weeks. There were incompatibilities and patch installs often came with some banana skins around dependencies.

The idea with SaaS is you hand over your operating systems to the CSP and hope for the best. So no access to SMB, RDP, or SSH. You have no visibility of patches that were installed, or not (!), and you have no idea which OS services are enabled or not. If you ask your friendly CSP for more information here, you will not get a reply, and if you do they will remind you that handed over your 50-million-lines-of-source-code OSes to them.

Here’s an example – one variant of the Conficker virus used the Windows ‘at’ scheduling service to keep itself prevalent. Now cloud providers don’t know if their customers need this or not. So – they verge on the side of danger and assume that they do. They will leave it enabled to start at VM boot up.

Note that also – SaaS instances will be invisible to credentialed VA scanners. The tool won’t be able to connect to SSH/RDP.

I am not suggesting for a moment that SaaS is bad. The cost benefits are clear. But when you moved to cloud, you saved on managing physical data centers. Perhaps consider that also saving on management of operating systems maybe taking it too far.

Patching

Don’t forget patching and look at how you are collecting and distributing patches. I’ve seen some architectures where the patching aspect is the attack vector that presents the highest danger, and there have been cases where malicious code was introduced as a result of poor patching.

The patches need to come from an integral source – this is where DNSSEC can play a part but be aware of its limitations – e.g. update.microsoft.com does not present a ‘dnskey’ Resource Record. Vendors sometimes provide a checksum or PGP cryptogram.

Some vendors do not present any patch integrity checksums at all and will force users to download a tarball. This is far from ideal and a workaround will be critical in most cases.

Redhat has their Satellite Network which will meet most organisations’ requirements.

For cloud, the best approach will usually be to ingress patches to a management VPC/Vnet, and all instances (usually even across differing code maturity level VPCs), can pull from there.

Delta Testing

Doing something like scanning critical networks for changes in advertised listening services is definitely a good idea, if not for detecting hacker shells, then for picking up on unauthorised changes. There is no feasible means to do this manually with nmap, or any other port scanner – the problem is time-outs will be flagged as a delta. Commercial offerings are cheap and allow tracking over long histories, there’s no false positives, and allow you to create your own groups of addresses.

Penetration Testing

There’s ideal state, which for most orgs is going to be something like mature vulnerability management processes (this is vulnerability assessment –> deduce risk with vulnerability –> treat risk –> repeat), and the red team pen test looks for anything you may have missed. Ideally, internal sec teams need to know pretty much everything about their network – every nook and cranny, every switch and firewall config, and then the pen test perhaps tells them things they didn’t already know.

Without these VM processes, you can still pen test but the test will be something like this: you find 40 holes of the 1000 in the sieve. But it’s worse than that, because those 40 holes will be back in 2 years.

There can be other circumstances where the pen test by independent 3rd party makes sense:

- Compliance requirement.

- Its better than nothing at all. i.e. you’re not even doing VA scans, let alone credentialed scans.

Wrap-up

- It’s far from all about application security. This area was covered in part two.

- Design a TVM capability (people, process, technology), don’t just acquire a technology (Qualys, Rapid 7, Tenable SC. etc), fill it with targets, and that’s it.

- Use your VA data to formulate risk, then decide how to treat the risk. Repeat. Note that CVSS ratings are not particularly useful here. You need to ascertain risk for your environment, not some theoretical environment.

- Credentialed scanning is the only solution worth considering, and indeed it’s highly likely that compliance schemes will soon start to mandate credentialed scanning.

- Use a network delta tester to pick up on hacker shells and unauthorised changes in network services and firewalls.

- Being dynamic with Kubernetes and microservices has not yet killed your platform risk or the OS in general.

- SaaS may be a step too far for many, in terms of how much you can outsource.

- When you SaaS’ify a service, you hand over the OS to a CSP, and also remove it from the scope of your TVM VA credentialed scanning.

- Penetration testing has a well-defined place in security, which isn’t supposed to be one where it is used to inform security teams about their network! Think compliance, and what ideal state looks like here.